Data Rescue with AI

Google Gemini has changed the game

There are billions of historical weather observations worldwide that are still only available as paper records or, if we’re lucky, scanned images.

We have been patiently waiting for AI to provide the tools to extract the observations from such images. And I think we’re nearly there. (Philip Brohan has already said this.)

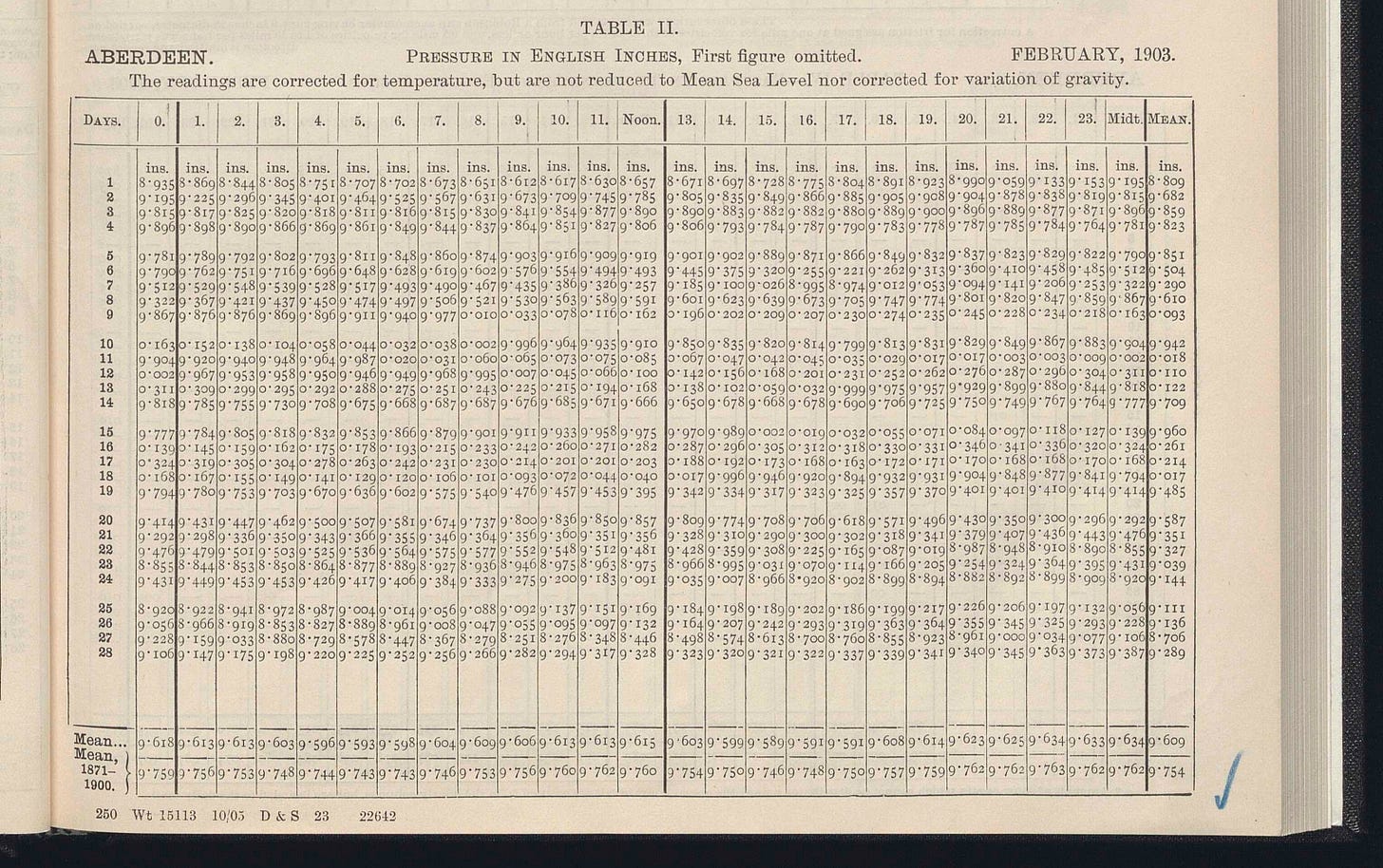

Take this table - hourly pressure observations taken in Aberdeen (UK) in February 1903 - as an example. There are hundreds of these scanned typeset tables available, but no-one has ever transcribed the measurements.

If you send images like this to Google Gemini (v2.5-pro-experimental) and ask to extract the pressure observations it will return every observation in structured JSON format.

We’ve done this for 144 such tables. This has cost around £0.10. Yes, 10p.

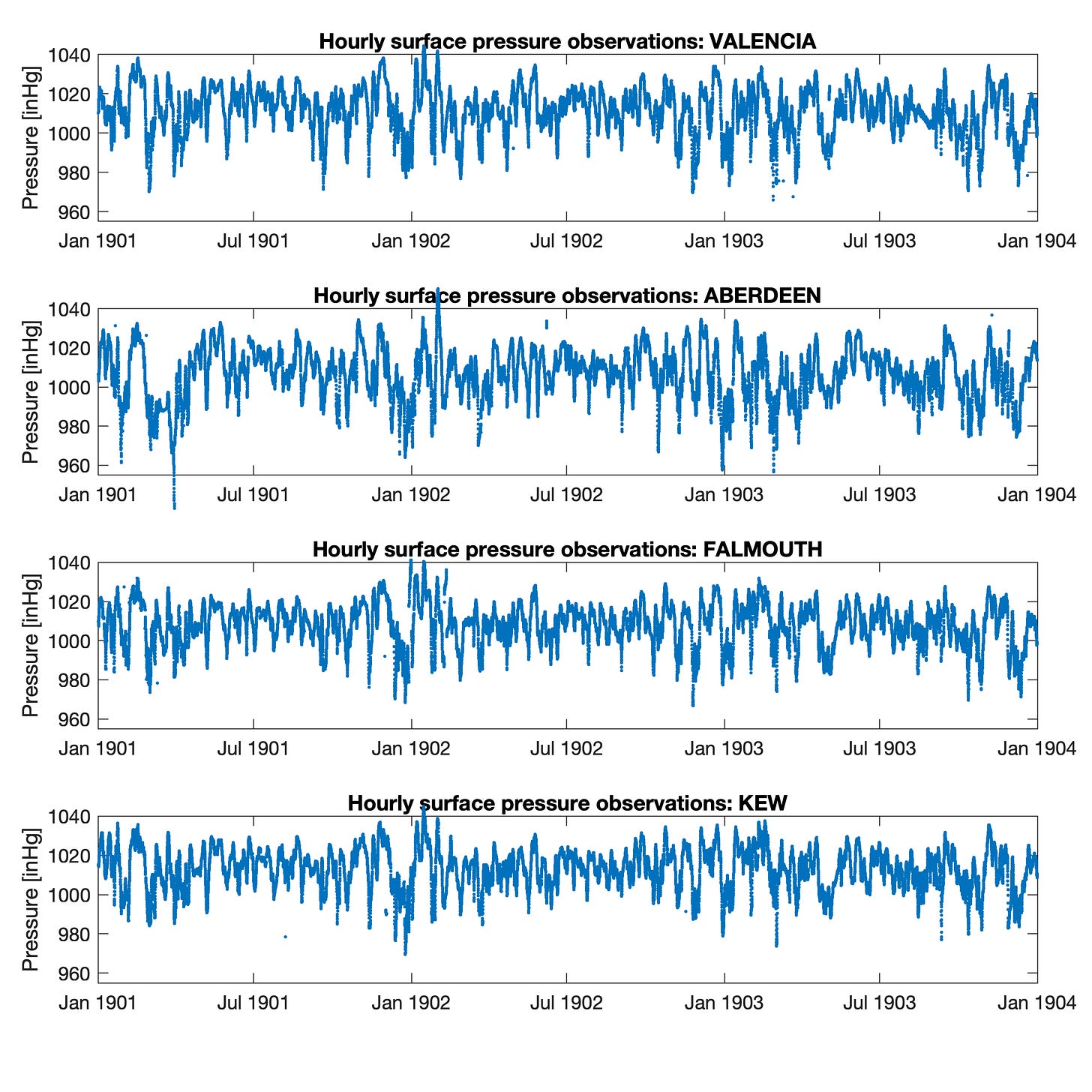

And, below is the result, with no quality control checks. Thousands of pressure observations from 4 locations across 3 years - 1901 to 1903.

The data are clearly not perfect. There are occasional misplaced dots, and sometimes a sequence of observations are 1 in/Hg (33.86mb) out - a common error when transcribing such data, even manually. There is consistency across the stations, adding confidence in the transcriptions.

Some more work is needed to automate some of the quality control steps but this data is almost usable for assimilation into a reanalysis as is.

But what about a harder hand-written example? There are hundreds of thousands of UK ‘climatological returns’ sheets, containing daily or twice-daily observations of many different weather variables. These were all originally posted by mail from the observers to the Met Office and are now scanned in the digital Met Office archives.

This one is from 1859. In the Shetland Islands.

If we ask Google Gemini to extract the barometer observations for each day for 9am and 9pm, it returns this JSON file

[

{"Day": 1, "9h AM": 28.855, "9h PM": 28.950},

{"Day": 2, "9h AM": 29.282, "9h PM": 29.380},

{"Day": 3, "9h AM": 29.266, "9h PM": 29.210},

{"Day": 4, "9h AM": 29.190, "9h PM": 29.101},

{"Day": 5, "9h AM": 28.850, "9h PM": 28.660},

{"Day": 6, "9h AM": 28.720, "9h PM": 28.814},

{"Day": 7, "9h AM": 28.742, "9h PM": 28.930},

{"Day": 8, "9h AM": 29.250, "9h PM": 29.700},

{"Day": 9, "9h AM": 30.048, "9h PM": 30.390},

{"Day": 10, "9h AM": 30.525, "9h PM": 30.500},

{"Day": 11, "9h AM": 30.406, "9h PM": 30.222},

{"Day": 12, "9h AM": 30.126, "9h PM": 29.980},

{"Day": 13, "9h AM": 30.010, "9h PM": 30.050},

{"Day": 14, "9h AM": 30.128, "9h PM": 30.233},

{"Day": 15, "9h AM": 30.354, "9h PM": 30.410},

{"Day": 16, "9h AM": 30.360, "9h PM": 30.230},

{"Day": 17, "9h AM": 30.000, "9h PM": 29.760},

{"Day": 18, "9h AM": 29.650, "9h PM": 29.776},

{"Day": 19, "9h AM": 29.810, "9h PM": 29.530},

{"Day": 20, "9h AM": 29.830, "9h PM": 29.780},

{"Day": 21, "9h AM": 29.540, "9h PM": 29.280},

{"Day": 22, "9h AM": 29.540, "9h PM": 29.830},

{"Day": 23, "9h AM": 29.840, "9h PM": 30.040},

{"Day": 24, "9h AM": 30.070, "9h PM": 30.070},

{"Day": 25, "9h AM": 30.050, "9h PM": 29.840},

{"Day": 26, "9h AM": 29.710, "9h PM": 29.475},

{"Day": 27, "9h AM": 29.186, "9h PM": 29.495},

{"Day": 28, "9h AM": 29.480, "9h PM": 29.380},

{"Day": 29, "9h AM": 29.494, "9h PM": 29.520},

{"Day": 30, "9h AM": 29.530, "9h PM": 29.700}

]

Which is almost perfect. It has misread a small number of the hard-to-read 8s as 5s. But it is spot-on apart from that. No hallucination.

A revolution is here. I think we will soon be able to rescue historical weather observations data much more efficiently and quickly.

Very cool Ed. Would be interesting for an agent to try this and it self reflect and look for anomalies etc. it sounds like the foundational models are almost there. I imagine you could fine tune a model on this data to see if performance increases but AI releases come out every 3 months or so perhaps they will start to have more historical data like this in their training datasets